Data Hack Tuesday

Tips & Tricks for Your Data

Brought to you every Tuesday.

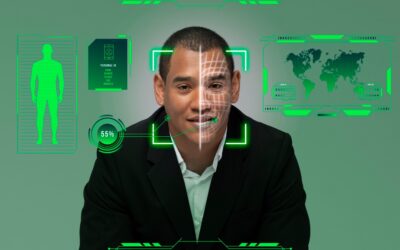

Biometric Authentication: Advancements and Challenges in Implementation

July 9, 2024

This article explores the main types of biometric authentication, recent technological advances, the hurdles to implementation, and the future prospects of this technology.

Driving Product Development Using AI

May 15, 2024

By leveraging AI, companies can streamline their development processes, saving time and resources. This article explores the exciting ways AI is being integrated into every stage of product development, from ideation to launch and beyond.

Exploring Generative AI in Content Creation: A Data Professional’s Handbook

May 3, 2024

Generative artificial intelligence (AI) offers a whole new approach to content production, allowing computers to generate innovative and creative output across a variety of fields.

Python: Streamlining Data Analysis with Pandas – Tips and Tricks

April 18, 2024

Pandas provides powerful tools to streamline your workflow and derive meaningful insights from your data.

LLMs Decoded: Leveraging Large Language Models for Big Data Solutions

March 19, 2024

In the era of information overload, LLMs offer innovative tools for organizing, accessing, and leveraging vast amounts of company knowledge.

Generative AI in Healthcare: Engineering Innovative Solutions for Diagnosis and Treatment

March 7, 2024

Going deeper into the role of generative AI in healthcare, we’ll explore its applications across different medical domains, its impact on patient care, and the challenges and opportunities it presents for the future of medicine.

Data Infrastructure – Making the Case for Immutable Infrastructure in Your Organization

February 20, 2024

By embracing immutable infrastructure principles, organizations can unlock many benefits, including improved reproducibility, enhanced security, streamlined rollback processes, and scalability advantages.

Making an Impact as a Prompt Engineer

February 6, 2024

The contributions of prompt engineers will be crucial in navigating the complexities of the relationship between humans and AI, steering the development of AI toward a future that reflects our shared aspirations and values.

GANs for Engineers: A Guide to Developing Unique Art Creation Algorithms

January 23, 2024

The use of GANs in art creation is an intriguing marriage of science and creativity, providing artists and designers with new tools for exploring novel expressions.