Building a machine learning model, like many other things, requires a series of steps and processes. Deployment is usually the last stage of model development, and often the most neglected. According to a VentureBeat article, only 13% of data science projects make it to production, which means that 87% of models built never get deployed.

The disconnect between data science and engineering is a major contributor to this. While engineers strive to keep things alive, stable, and running continuously, data scientists prefer to experiment and figure out the best way to build models and improve their accuracy, even if it means breaking things in the process. This has resulted in very limited collaboration between the two parties. In the end very few models are deployed. Other reasons include a lack of leadership support and access to the appropriate data.

You may not be too concerned with these issues right now, but it is critical to learn how to build models, deploy them, and have them tested in the real world. In this article, you will learn how to deploy a machine learning model on an AWS EC2 (Elastic Compute Cloud) instance.

Model Deployment: A Scenario

Here’s a scenario to help you understand the importance of model deployment. Yua is an Osaka University student studying Computer Science in her Junior Year. As Yua is interested in Machine Learning and Artificial Intelligence, she decides to build a model that can answer questions when context is provided for her demo project.

Yua discovered while doing research that there are already pre-trained models built by Hugging Face that could help her get started quickly and achieve her goals. Seeing this, she decides to put the pre-trained models to the test by having her friends ask questions and provide context while observing the responses to gain a better understanding of how to tune the model to achieve her goals.

To accomplish this, she decides to make the model available using Flask, a Python web framework, and AWS, Amazon's cloud computing platform. Yua then reaches out to you (someone well versed in Python) for assistance in building this demo because her Python skills are currently limited. How would you approach it?

Prerequisites

You need to have the following before you can proceed to build the project:

1. The Flask library installed on your machine. You can install it using pip install flask.

2. The Transformers library installed on your machine. You can install it using pip install transformers or refer to the official documentation for more options.

3. The PyTorch library also installed on your machine. You can find the appropriate installation command here, but if you're working on a Linux machine, you can simply use pip3 install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cpu.

Building The Application

To begin, import the Flask class from the flask library and define the endpoint /, which will return some text if a request is sent to it.

from flask import Flask

app = Flask(__name__)

@app.route('/')
def home():
    return "Hi, my name is MIA, I answer questions based on contexts."

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=8000)

Next, construct Yua's model demo using a pre-trained model from the transformers library:

from transformers import pipeline

# Load the question-answering pipeline once at startup
question_answerer = pipeline("question-answering", model='distilbert-base-cased-distilled-squad')

def question_answer(context, question):
    # Pass the caller's question rather than a hard-coded one
    result = question_answerer(question=question, context=context)
    score = result['score']
    answer = result['answer']
    return score, answer

What’s going on here?

- The pipeline function is imported from the transformers library.

- The pipeline is then created with the task question-answering and the model distilbert-base-cased-distilled-squad.

- Finally, the question_answer function is defined, which accepts two parameters, context and question, and returns the score as well as a possible answer to the question. The score here is the model's confidence in that answer, a value between 0 and 1.
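Because the score reflects the model's own confidence rather than a guarantee of correctness, one option is to withhold low-confidence answers. The following is a minimal sketch of that idea. The names answer_or_abstain and fake_pipeline and the 0.5 threshold are illustrative assumptions, not part of Yua's project. The stand-in returns a dictionary with the same score and answer keys that the real pipeline produces, so the sketch runs without downloading a model.

```python
def answer_or_abstain(qa, context, question, threshold=0.5):
    """Return (score, answer); answer is None when the score is below the threshold."""
    result = qa(question=question, context=context)
    score = result["score"]
    answer = result["answer"] if score >= threshold else None
    return score, answer


def fake_pipeline(question, context):
    # Hypothetical stand-in for the Hugging Face pipeline (fixed output for illustration).
    return {"score": 0.18, "start": 0, "end": 23, "answer": "Python as a large snake"}


score, answer = answer_or_abstain(fake_pipeline, "some context", "some question")
print(score, answer)
```

In the real application, question_answerer would be passed in place of fake_pipeline, and the threshold would need tuning against actual questions.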

After that, update the previous endpoint to allow users to make POST requests with a context and a question (this requires importing request from flask). The endpoint passes these values to the question_answer function, which returns the score and answer.

@app.route('/', methods=['POST', 'GET'])
def home():
    if request.method == 'POST':
        data = request.json
        context = data['context']
        question = data['question']
        score, answer = question_answer(context, question)
        score = round(score, 2)
        message = f"{question} Answer: {answer.capitalize()} \n I am {score*100} percent sure of this"
        return message
    else:
        return "Hi, my name is MIA, I answer questions based on contexts."

As previously stated, the endpoint takes two fields from the JSON body of the POST request, context and question, and uses the question_answer function to calculate the corresponding score and answer.
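One thing the endpoint does not handle is a request body that lacks one of the two fields: indexing the dictionary with a missing key raises a KeyError, which Flask reports as a 500 error. A minimal sketch of a validation helper follows. The name parse_payload and the error wording are assumptions made for illustration.

```python
def parse_payload(data):
    """Return (context, question) or raise ValueError describing the problem."""
    if not isinstance(data, dict):
        raise ValueError("request body must be a JSON object")
    # A field counts as missing when it is absent, not a string, or blank.
    missing = [
        key for key in ("context", "question")
        if not (isinstance(data.get(key), str) and data[key].strip())
    ]
    if missing:
        raise ValueError("missing or empty field(s): " + ", ".join(missing))
    return data["context"], data["question"]


print(parse_payload({"context": "Mary had a little lamb", "question": "What did Mary have?"}))
```

Inside the endpoint, the ValueError could be caught and its message returned together with a 400 status code, since a Flask view may return a (body, status) tuple.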

The final code should look like this:

from flask import Flask, request
from transformers import pipeline

app = Flask(__name__)

# Load the question-answering pipeline once at startup
question_answerer = pipeline("question-answering", model='distilbert-base-cased-distilled-squad')

def question_answer(context, question):
    # Pass the caller's question rather than a hard-coded one
    result = question_answerer(question=question, context=context)
    score = result['score']
    answer = result['answer']
    return score, answer

@app.route('/', methods=['POST', 'GET'])
def home():
    if request.method == 'POST':
        data = request.json
        context = data['context']
        question = data['question']
        score, answer = question_answer(context, question)
        score = round(score, 2)
        message = f"{question} Answer: {answer.capitalize()} \n I am {score*100} percent sure of this"
        return message
    else:
        return "Hi, my name is MIA, I answer questions based on contexts."

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=8000)

Finally, save the file as main.py, open your terminal, and run python main.py to launch your Flask application.

If you were successful, you should receive the following response:

Downloading (…)lve/main/config.json: 100%|██████████| 473/473 [00:00<00:00, 72.5kB/s]
Downloading (…)"pytorch_model.bin";: 100%|██████████| 261M/261M [00:00<00:00, 291MB/s]
Downloading (…)okenizer_config.json: 100%|██████████| 29.0/29.0 [00:00<00:00, 12.4kB/s]
Downloading (…)solve/main/vocab.txt: 100%|██████████| 213k/213k [00:00<00:00, 659kB/s]
Downloading (…)/main/tokenizer.json: 100%|██████████| 436k/436k [00:00<00:00, 1.08MB/s]
 * Serving Flask app 'main'
 * Debug mode: on
WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.
 * Running on http://127.0.0.1:8000
Press CTRL+C to quit
 * Restarting with stat
Debugger is active!
Debugger PIN: 816-732-827

You can open a second terminal and test the model's functionality by running the following command:

curl --location 'localhost:8000' --header 'Content-Type: application/json' --data '{"context" : "Mary had a little lamb", "question" : "What did Mary have?"}'

If successful, you will get an output similar to this:

What did Mary have? Answer: Mary had a little lamb

I am 52.0 percent sure of this

It provides an answer to the question as well as the probability that its answer is correct. Now that you have verified that the program is running correctly, the next step will be to deploy it to AWS EC2.
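One detail of the reply format is worth a note: multiplying a rounded float by 100 can expose floating-point representation error (in Python, 0.29 * 100 evaluates to 28.999999999999996). A small sketch of one way around this is to scale first and round afterwards. The helper name format_reply is an assumption, and rounding to one decimal place is a choice made here, not something the original code does.

```python
def format_reply(question, answer, score):
    """Build the reply text, rounding the percentage after scaling."""
    percent = round(score * 100, 1)
    return f"{question} Answer: {answer.capitalize()} \n I am {percent} percent sure of this"


print(format_reply("What did Mary have?", "a little lamb", 0.29))
```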

Deploying To AWS EC2 Instance

To deploy the app to AWS, you must first create and launch an instance. If you’re not sure how to set up an EC2 instance, you can follow this [step-by-step guide]. Here are a few things to consider as you configure your instance:

- Set Ubuntu as your preferred operating system

- The default volume size is 8GB, but due to the size of the Transformers library, its dependencies, and the model weights, it must be increased to at least 15GB.

- Lastly, allow HTTPS and HTTP access from any location.
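After the instance is running, a quick way to confirm that the larger volume took effect is to check the free disk space from Python before installing anything. This is a small sketch using only the standard library. The function names and the 5 GiB figure are assumptions chosen for illustration, not measured requirements.

```python
import shutil


def free_gib(path="/"):
    """Free space on the filesystem containing path, in GiB."""
    return shutil.disk_usage(path).free / 2**30


def has_room(path="/", required_gib=5.0):
    """True when at least required_gib of free space is available."""
    return free_gib(path) >= required_gib


print(f"{free_gib('/'):.1f} GiB free, enough room: {has_room('/')}")
```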

Once your instance is up and running, you can use PuTTY or OpenSSH to connect to it from your terminal.

Cloning The GitHub Repo

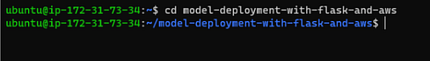

To begin deployment, clone the GitHub repository using

git clone https://github.com/EphraimX/model-deployment-with-flask-and-aws

Then, using cd model-deployment-with-flask-and-aws, navigate to the directory.

Creating A Virtual Environment

Following the cloning of the repository, the next step is to create a virtual environment and install the necessary packages. To accomplish this:

- Update the package lists by running sudo apt-get update.

- Next, run sudo apt-get install python3-venv to install Python's venv module.

- Next, run python3 -m venv env to create the virtual environment.

- Finally, run source env/bin/activate to activate the virtual environment.
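The environment can also be created from Python itself, which can be handy in a provisioning script. The sketch below uses the standard library's venv module. The helper name create_env is an assumption, and the module's default options differ slightly from the command line (for example, in whether files are symlinked or copied).

```python
import venv
from pathlib import Path


def create_env(path="env", with_pip=True):
    """Create a virtual environment and return the path to its pyvenv.cfg."""
    venv.create(path, with_pip=with_pip)
    return Path(path) / "pyvenv.cfg"


# Example (not run here): create_env("env") creates the environment in ./env
```

Activation still happens in the shell with source env/bin/activate, since it only changes the current shell session.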

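After that, proceed to install the necessary packages listed in the requirements.txt file. This file has to be generated in an environment where the packages are already installed (for example, your development machine) and committed to the repository; running the command in the fresh virtual environment would produce an empty file. It is created with:

pip3 freeze > requirements.txt

With the file in place on the instance, install the packages with

pip3 install -r requirements.txt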

You may need to wait a few minutes for the libraries to be installed due to their size and your network speed.

Once installed, you can run a test to see if the installation was successful by running:

python main.py

Installing Gunicorn

Flask's built-in server, provided by Werkzeug, is intended for development only and should not be used in production. To run the Flask app in production, install a production WSGI server such as Gunicorn.

Run pip3 install gunicorn in your terminal, followed by gunicorn -b 0.0.0.0:8000 main:app to serve your Flask app with Gunicorn.
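Gunicorn can also read its options from a Python configuration file instead of command-line flags. The sketch below shows what such a file might contain. The settings bind, workers, and timeout are standard Gunicorn settings, but the values are assumptions: each worker loads its own copy of the model, so the worker count is kept small, and the timeout is raised to leave room for slow first requests.

```python
# gunicorn.conf.py (a hypothetical file, passed with: gunicorn -c gunicorn.conf.py main:app)
import multiprocessing

# Same address and port as the -b flag used above
bind = "0.0.0.0:8000"

# Every worker holds its own copy of the model in memory, so cap the count at 2
workers = min(2, multiprocessing.cpu_count())

# Seconds a worker may stay silent before Gunicorn restarts it (assumed value)
timeout = 120
```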

To keep your server online at all times, register Gunicorn as a systemd service so that it starts again whenever the EC2 instance is rebooted. To do so, open a new terminal, log into your EC2 instance, and run:

sudo nano /etc/systemd/system/helloworld.service

Then paste the unit definition below into the file:

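# Paths assume the repository was cloned into /home/ubuntu
# and the virtual environment is named env, as created earlier.
[Unit]
Description=Gunicorn instance for Model Deployment with Flask and AWS
After=network.target

[Service]
User=ubuntu
Group=www-data
WorkingDirectory=/home/ubuntu/model-deployment-with-flask-and-aws
ExecStart=/home/ubuntu/model-deployment-with-flask-and-aws/env/bin/gunicorn -b localhost:8000 main:app
Restart=always

[Install]
WantedBy=multi-user.target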

Once finished, press Ctrl+S to save the file and Ctrl+X to exit it.

After that, reload systemd, then start and enable the service by running the following commands:

sudo systemctl daemon-reload

sudo systemctl start helloworld

sudo systemctl enable helloworld
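If the service fails to start, a common cause is a path in the unit file that does not match the project directory or the virtual environment. The sketch below reads a unit definition with Python's configparser and checks that the ExecStart executable lives inside WorkingDirectory. The function names and the SAMPLE text with its /home/ubuntu/app path are hypothetical, and the check is a convenience, not something systemd itself requires.

```python
import configparser
from pathlib import PurePosixPath

SAMPLE = """
[Service]
WorkingDirectory=/home/ubuntu/app
ExecStart=/home/ubuntu/app/env/bin/gunicorn -b localhost:8000 main:app
"""


def unit_paths(unit_text):
    """Return (working directory, executable) from the [Service] section."""
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.optionxform = str  # keep systemd's case-sensitive key names
    parser.read_string(unit_text)
    service = parser["Service"]
    workdir = PurePosixPath(service["WorkingDirectory"])
    executable = PurePosixPath(service["ExecStart"].split()[0])
    return workdir, executable


def exec_inside_workdir(unit_text):
    """True when the ExecStart executable is located under WorkingDirectory."""
    workdir, executable = unit_paths(unit_text)
    return workdir in executable.parents


print(exec_inside_workdir(SAMPLE))
```

The service's own log, viewed with sudo systemctl status helloworld, remains the authoritative place to look for the actual error.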

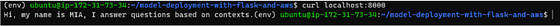

You can check if the app is still running using:

curl localhost:8000

If it's still running, you'll get the greeting returned by the home endpoint:

Hi, my name is MIA, I answer questions based on contexts.
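Because the model takes a moment to load after the service starts, the first check may fail even though the service is healthy. A minimal sketch of a retrying health check, using only the standard library, is shown below. The function name wait_until_up and the default attempt count and delays are assumptions.

```python
import time
import urllib.request


def wait_until_up(url, attempts=5, delay=1.0, timeout=3.0):
    """Poll url until it answers with HTTP 200; return False if it never does."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                if response.status == 200:
                    return True
        except OSError:
            pass  # connection refused, timeout, or HTTP error: try again
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


# Example (run on the instance): wait_until_up("http://localhost:8000")
```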

Setting Up NGINX Server

To receive external requests, you’ll need a webserver like NGINX to accept and route them to Gunicorn.

To get started with the NGINX server:

- Run sudo apt-get install nginx on your machine.

- Next, run sudo systemctl start nginx to start the server.

- Next, run sudo systemctl enable nginx to enable the service.

- You must edit the default file in the sites-available folder to connect the NGINX server to Gunicorn. To accomplish this, execute sudo nano /etc/nginx/sites-available/default.

- Then, at the top of the file, insert the following code (below the default comments):

upstream flaskhelloworld {
    server 127.0.0.1:8000;
}

- Finally, add a proxy_pass directive pointing to flaskhelloworld inside the existing location / block:

location / {
    proxy_pass http://flaskhelloworld;
}

After completing these steps, restart NGINX with ‘sudo systemctl restart nginx’, and your application should be operational.

Testing Your Application

To see if your application is up and running and accessible from anywhere, open your terminal (you do not need to log into your EC2 instance) and type:

curl --location 'http://34.204.60.216'

If successful, you will get the response:

Hi, my name is MIA, I answer questions based on contexts.

Run the following commands to put the model’s predictions to the test:

curl --location 'http://34.204.60.216' \
--header 'Content-Type: application/json' \
--data '{
"context" : "Python was created by Guido van Rossum, and first released on February 20, 1991. While you may know the python as a large snake, the name of the Python programming language comes from an old BBC television comedy sketch series called Monty Python'\''s Flying Circus.",
"question" : "When was Python first released?"
}'

Here’s what I got in response:

When was Python first released? Answer: Python as a large snake

I am 18.0 percent sure of this

Oops, I guess the model still requires some fine-tuning. You can experiment with different contexts and questions to see how well the model responds, but for now, you have successfully assisted Yua in building her demo project. Congratulations!
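For repeated experiments, sending the request from Python avoids the shell quoting needed in the curl command. The following is a minimal client sketch using only the standard library. The function names build_request and ask and the 30-second timeout are assumptions, and the URL should be replaced with your own instance's address.

```python
import json
import urllib.request


def build_request(url, context, question):
    """Create the POST request with the JSON body the endpoint expects."""
    body = json.dumps({"context": context, "question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(url, context, question, timeout=30.0):
    """Send the question to the deployed app and return the reply text."""
    with urllib.request.urlopen(build_request(url, context, question), timeout=timeout) as response:
        return response.read().decode("utf-8")


# Example (requires the deployed app to be reachable):
# print(ask("http://34.204.60.216", "Mary had a little lamb", "What did Mary have?"))
```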

Conclusion

In this article, you successfully deployed a Hugging Face machine learning model on an AWS EC2 instance using Flask. To learn more about AWS, visit their documentation here. Stay tuned for more articles like this one!